The AI Security Challenge

As AI adoption explodes, employees are sharing sensitive data with AI chatbots without realizing the risks. DataFence provides the first line of defense against AI-related data breaches.

Monitor All AI Interactions

DataFence monitors text inputs and file uploads to all major AI platforms, including:

- ChatGPT: OpenAI's conversational AI

- Claude: Anthropic's AI assistant

- Gemini: Google's multimodal AI

- GitHub Copilot: AI-powered coding assistant

- And more: Perplexity, Midjourney, and emerging AI tools

Intelligent Content Filtering

Our AI-powered engine detects and blocks sensitive information before it reaches AI platforms:

- Source code: Protect proprietary algorithms and IP

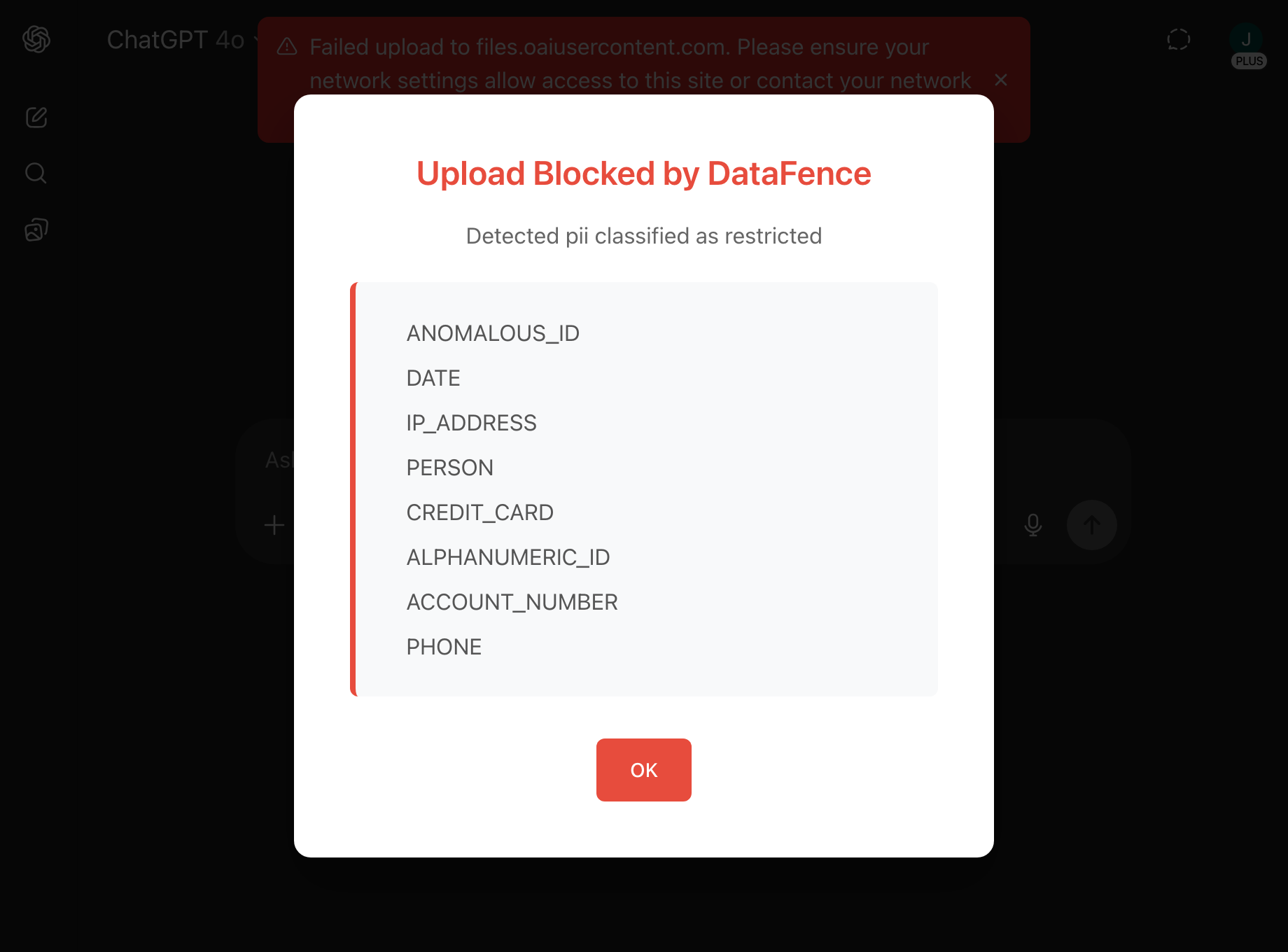

- PII/PHI: Block personal and health information

- Credentials: Prevent API keys and passwords from exposure

- Confidential docs: Stop contracts and strategies from leaking
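As a rough illustration of the kind of check involved, the sketch below flags two of the categories above with simple regular expressions. It is not DataFence's engine, which is described as AI-powered. The pattern set, the category labels, and the `scan_text` name are assumptions chosen for this example, and real detection would need far broader coverage.

```python
import re

# Illustrative patterns only (assumed for this example, not DataFence's rules).
PATTERNS = {
    # AWS-style access key IDs and "sk-" prefixed API keys
    "credential": re.compile(r"\b(?:AKIA[0-9A-Z]{16}|sk-[A-Za-z0-9]{20,})\b"),
    # Email addresses
    "pii_email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    # US Social Security numbers in the 123-45-6789 layout
    "pii_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_text(text: str) -> list[str]:
    """Return the sorted names of every category whose pattern matches the text."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))
```

A caller would block or warn whenever `scan_text` returns a non-empty list, and let the text through otherwise.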

Real AI Security Risks Your Organization Faces

Samsung's $1.2B Code Leak

Engineers pasted proprietary semiconductor code into ChatGPT, potentially exposing critical IP to OpenAI's training data.

Healthcare PHI Exposure

Medical staff using AI to summarize patient notes inadvertently shared protected health information.

Financial Data Breach

Analysts who upload earnings reports to AI tools before public release risk insider trading violations.

Legal Document Leaks

Law firms that share confidential client contracts with AI tools for review risk breaching attorney-client privilege.

AI Protection Features

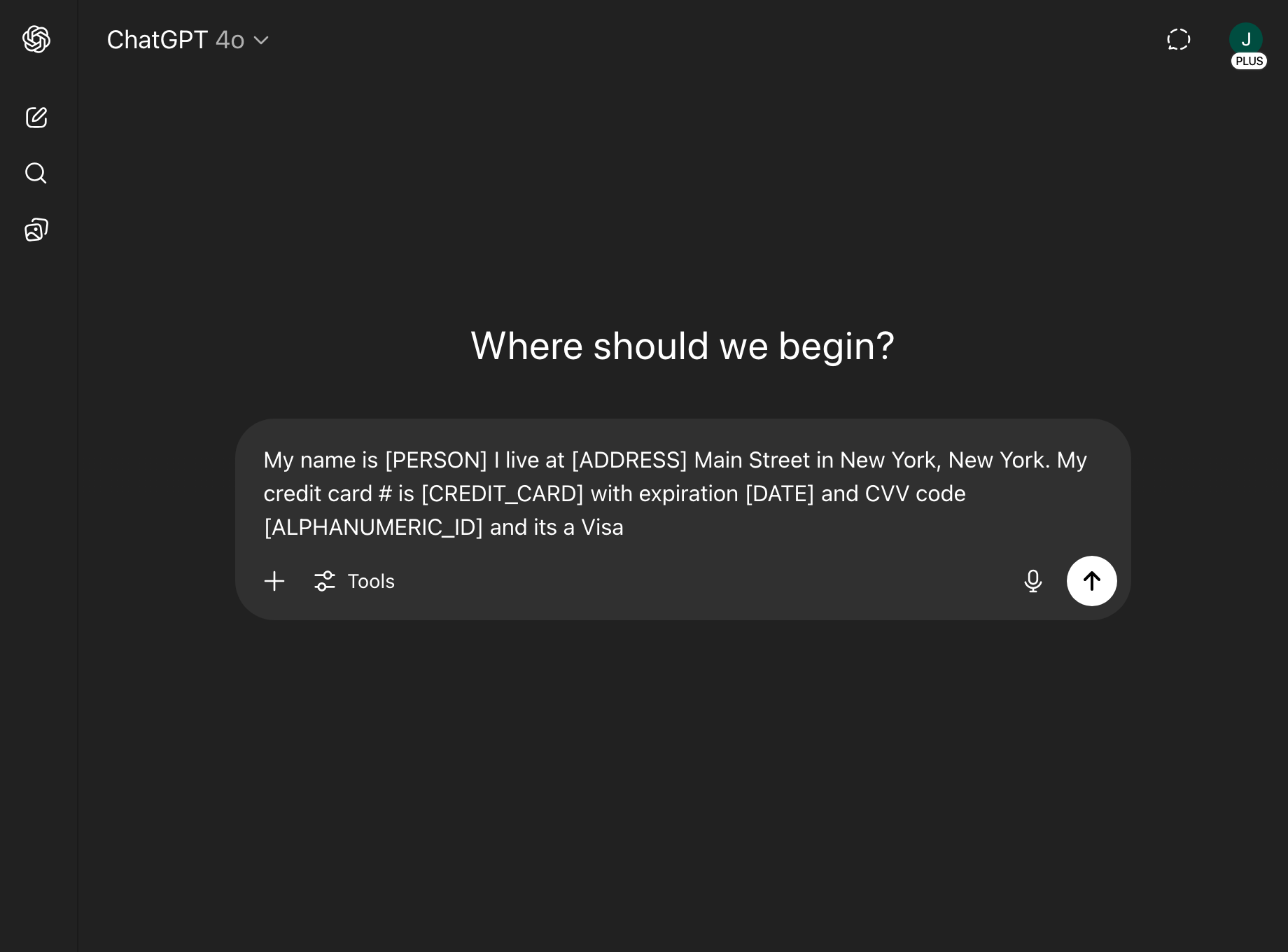

Paste Protection

Monitor and block sensitive data being pasted into AI chat interfaces, preventing accidental exposure of confidential information.

File Upload Blocking

Prevent documents, spreadsheets, and code files containing sensitive data from being uploaded to AI platforms.

User Education

Real-time warnings educate users about AI risks when they attempt to share sensitive information.

Flexible Policies

Create granular rules by AI platform, user group, or data type. Allow safe AI use while blocking risky behavior.
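One way to picture such rules is as an ordered list where the first match wins. The sketch below is a minimal model of that idea, not DataFence's policy format. The `Rule` fields, the `"*"` wildcard, the action names, and the `decide` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    platform: str    # e.g. "chatgpt", or "*" for any platform
    user_group: str  # e.g. "engineering", or "*" for any group
    data_type: str   # e.g. "source_code", or "*" for any data type
    action: str      # "allow", "warn", or "block"


def _matches(pattern: str, value: str) -> bool:
    # A rule field matches when it is the wildcard or equals the value exactly.
    return pattern == "*" or pattern == value


def decide(rules: list[Rule], platform: str, user_group: str, data_type: str,
           default: str = "allow") -> str:
    """Return the action of the first rule that matches all three fields."""
    for rule in rules:
        if (_matches(rule.platform, platform)
                and _matches(rule.user_group, user_group)
                and _matches(rule.data_type, data_type)):
            return rule.action
    return default


# Hypothetical policy: engineers may send code to Copilot, nobody else may
# send code anywhere, and PII is blocked everywhere.
RULES = [
    Rule("copilot", "engineering", "source_code", "allow"),
    Rule("*", "*", "source_code", "block"),
    Rule("*", "*", "pii", "block"),
]
```

Ordering the specific exception before the general block is what lets safe AI use continue while risky behavior is stopped.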

Usage Analytics

Use detailed analytics to understand how your organization uses AI tools and to identify potential security gaps.

API Integration

Extend protection to custom AI implementations and internal tools with our comprehensive API.
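The DataFence API itself is not documented here, so the sketch below only shows the general integration pattern: scan each prompt before an internal tool forwards it to a model. Every name in it (`guarded`, `BlockedPromptError`, `fake_scan`, `fake_model`) is a placeholder. In a real deployment the scan step would call whatever endpoint the API actually provides.

```python
from typing import Callable


class BlockedPromptError(Exception):
    """Raised when a prompt is rejected before it reaches the model."""


def guarded(send: Callable[[str], str],
            scan: Callable[[str], list[str]]) -> Callable[[str], str]:
    """Wrap `send` so that every prompt is scanned before it is forwarded."""
    def wrapper(prompt: str) -> str:
        findings = scan(prompt)
        if findings:
            raise BlockedPromptError(f"blocked: {', '.join(findings)}")
        return send(prompt)
    return wrapper


# Stand-ins for a real scanning call and a real internal model endpoint.
def fake_scan(prompt: str) -> list[str]:
    return ["credential"] if "password=" in prompt else []


def fake_model(prompt: str) -> str:
    return f"echo: {prompt}"


ask = guarded(fake_model, fake_scan)
```

Wrapping the send function keeps the check in one place, so individual internal tools do not each need their own filtering logic.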

Easy Implementation in 3 Steps

Deploy Extension

Roll out the DataFence browser extension to all employees via group policy or MDM.

Configure Policies

Set rules for AI platforms based on your security requirements and use cases.

Monitor & Refine

Review analytics, adjust policies, and ensure safe AI adoption across your organization.